Getting a Hypergraph of Functions to a Browser

By John Obelenus

10/7/2025

A Peek Under The Hood

Infrastructure is a rich domain full of dependencies and relationships. To best support the domain, our underlying data model is a graph, not normalized data. You want changes in one element to ripple across the graph's relationships, impacting other elements. If you want to configure EC2 instances, it is better to set their region in one place and have all the instances get their region information from it.

Once you have this graph of relationships and enough components, changing small pieces will have significant consequences. The two most essential pieces of our product are performance and quality. Having a team of people see changes quickly, clearly, and correctly is the best product experience we could have, and the experience we need. The System Initiative product is a world modelling program, just like Unity is a world modelling program for gaming. To have a reliable model of the world, users need to see what is changing when it changes.

We call this "multiplayer". It isn't only about coordination and teamwork between people changes, but also the changes that AI agents make. Let’s not forget about having real-world changes to infrastructure reflected in the data model and displayed in the product, too. This is not like IaC. Where IaC is a single direction, taking a declaration in code and trying to reconcile it with the real world. System Initiative is a bi-directional real-time simulation of the world, with full fidelity baked in at every level.

As the amount of infrastructure we managed in the product increased, the sheer volume of data we were transferring became unsustainable, making it difficult to provide a positive user experience.

As the product's depth and breadth grew, the interface became a greater source of quality issues. Between loading data from user exploration, saved changes being sent to the client, and the active user making mutations to the data, we needed something beyond the "traditional" stored-based approaches.

We knew two things. First, we needed data persistence in the client. When a user returns to a workspace to view their infrastructure, they shouldn't need to load data from the API, as it is already available! Second, our front end required a reliable and consistent data model without tight coupling or re-implementing the graph data model from the back end.

SQLite To the Rescue!

WASM has been an answer that many engineering teams have used to power their browser clients, whether it's Figma, Adobe, AutoCad, Microsoft Blazor, or Notion. With all major browsers’ web workers supporting the Origin Private File System, we had our answer. We can store all the data we could need locally on the client in SQLite with very fast seek times. The most costly part of data access that impacts users is pulling data between the web worker thread and the main browser thread.

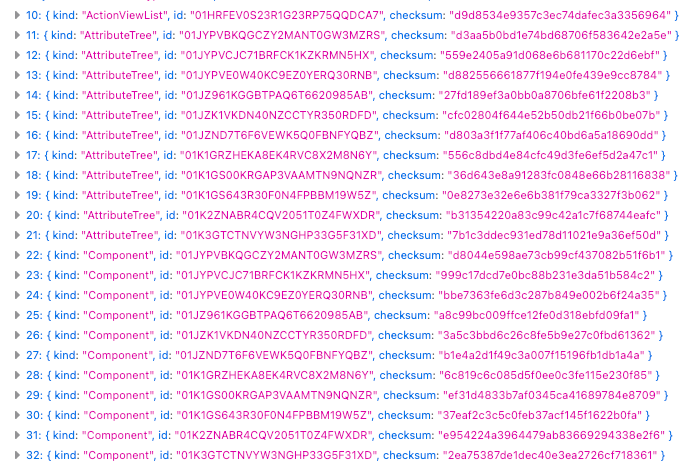

We drew inspiration from KV stores; the storage layer treats the value data as a JSON blob, which we refer to as atoms. The "key" is composed of three parts: the kind of data it is, its internal ID, and its checksum.

The System Initiative product uses change sets to power the simulation. The HEAD change set represents the real world. So all the components you are managing are on HEAD. When you create a change set, we copy all those components into another change set, allowing you to simulate your changes without affecting the real world.

Our client-side data storage prevents storing the same component multiple times across change sets when they are identical. A component that is on HEAD is stored once, and when you create a new change set, we reuse the same data reference. If you change that component in a change set, that makes a new reference. The component reference on HEAD remains the same, unchanged. Each change set has an index of all the components it contains.

A Front End Data Model

In many applications, the data models on the front end and the back end are close to identical. Our graph data model is optimized for the tasks we need to perform in Rust, as well as for storage & retrieval. The majority of it isn't required for the client interfaces. However, we didn't want to reimplement a graph that needed to match a Rust implementation in JavaScript.

Instead, we created materialized views of the data. Anyone working on the product interface can define the shape of the data they want to work with. They define it based on the Rust graph access. And the resulting JSON blob will be assembled and kept up to date by the system. There is zero problem duplicating data across materialized views. We intentionally do not create a "normalized" view of the data that is joined in SQL queries. Each view (or atom on the front end) is fully encapsulated to meet the specific user interface needs.

pub struct ComponentInList {

pub id: ComponentId,

pub name: String,

pub color: Option<String>,

pub schema_name: String,

pub schema_id: SchemaId,

pub schema_variant_id: SchemaVariantId,

pub schema_variant_name: String,

pub schema_category: String,

pub has_resource: bool,

pub resource_id: Option<Value>,

pub qualification_totals: ComponentQualificationStats,

pub input_count: usize,

pub diff_status: ComponentDiffStatus,

pub to_delete: bool,

}

This data model, defined in Rust, pushes every graph mutation up to every client through a WebSocket for every change set, and we store it all in SQLite. When each client reconnects, it references the change set index (the list of all atoms) and reconciles any items that are out of date. In a real way, this is a highly flexible and robust cache of data. The system notifies each client when its data has mutated.

The main browser thread, where our Vue application code is executed, uses TanStack to mediate data access, which also caches the data in the main thread memory. Every user's access to this data involves a local data access call to SQLite, rather than a network connection.

const componentQuery = useQuery<BifrostComponent | undefined>({

queryKey: key(EntityKind.Component, componentId),

queryFn: async (queryContext) =>

(await bifrost<BifrostComponent>(

args(EntityKind.Component, componentId.value),

)) ??

queryContext.client.getQueryData(

key(EntityKind.Component, componentId).value,

),

});

This design removes a significant amount of accidental complexity typically associated with API access, not to mention the dozens of endpoints we've deleted since implementing this architecture.

A Realization

Our interface has achieved a level of performance for our users rarely seen in other web applications. Our team has realized a level of productivity and maintainability that has surprised everyone.

And it became clear to me, after some reflection, that our underlying graph database implementation made this all possible in a far easier way than with a typical, normalized, relational data model.

John Obelenus, Software Engineer III

John has been building complex and interactive web-based systems and products that run companies for nearly two decades.